Jira Workflows vs AI Agents: Eight Processes That Moved On

I spent years building Jira workflows. Custom statuses, validators, post-functions, conditions — the whole machinery. Some of them were elegant. Most of them worked. All of them eventually became obstacles.

This is not a rant about Jira. It is a diagnosis.

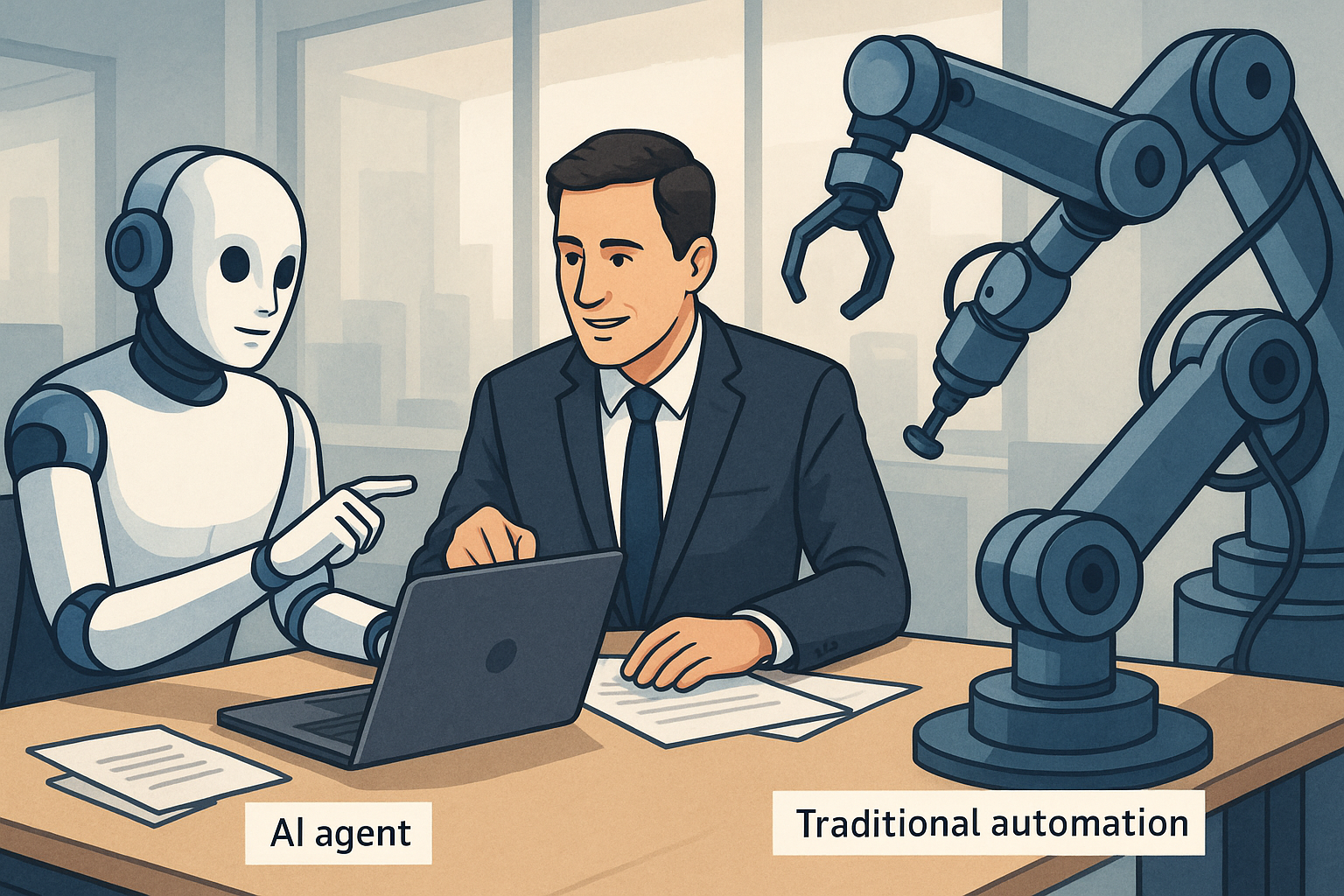

The distinction is simple once you see it: Jira Workflows excel at controlling statuses. AI agents excel at handling meaning. Many processes we forced into status transitions were never really about statuses. They were about thinking, context, and judgment. We just didn't have anything else.

Now we do.

The Core Difference

Here is the thesis, stated plainly:

Jira Workflows track where something is. AI agents understand what something is.

For years, we disguised cognitive work as status changes. A task moved from Draft to Refined — but refinement happened in people's heads, not in the transition. The workflow captured the outcome. It never participated in the process.

Agent environments like Claude Code or Codex change this. They don't just record that refinement happened — they help you refine. They ask questions, identify gaps, propose alternatives. They are participants, not ledgers.

This matters for more processes than you might expect.

1. Grooming and Refinement

What Jira did: A task sat in Draft. Someone wrote requirements. Others commented. Eventually, someone moved it to Refined. Checklists ensured certain fields were filled.

What actually needs to happen: Analysis of requirements. Identification of contradictions. Clarification of scope. Discovery of hidden assumptions.

Why agents win: An AI agent engages in dialogue. It asks clarifying questions before you realize they matter. It proposes acceptance criteria instead of waiting for you to remember them. It catches logical gaps while you're still typing.

Jira workflow: imitation of thinking. Agent: actual thinking.

2. Definition of Ready and Definition of Done

What Jira did: Mandatory custom fields. Checkbox validators. Transition conditions that blocked movement until every box was ticked.

What DoR/DoD actually requires: Contextual judgment. Is this feature story ready? It depends on the architecture, the team's familiarity with the domain, the current sprint load, and a dozen other factors no checkbox can capture.

Why agents win: An agent analyzes the task description, compares it against your codebase, considers the task type, and renders a reasoned verdict. Not "all fields filled" — but "this is ready because X, Y, and Z."

Jira checks form. Agents check substance.
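To make the form-versus-substance gap concrete, here is a minimal sketch of what a mandatory-field validator effectively does. The field names and the ticket dictionaries are hypothetical, chosen only for illustration; this is not Jira's validator API.

```python
# Hypothetical required fields for a "Definition of Ready" gate.
REQUIRED_FIELDS = ("summary", "description", "acceptance_criteria", "estimate")


def passes_field_validator(ticket: dict) -> bool:
    """Form check: True when every required field is present and non-empty."""
    return all(str(ticket.get(field, "")).strip() for field in REQUIRED_FIELDS)


# Every field is filled, but none of the content says anything useful.
placeholder_ticket = {
    "summary": "Improve search",
    "description": "TBD",
    "acceptance_criteria": "Works as expected",
    "estimate": 3,
}

# Fields are missing, so the gate blocks the transition.
incomplete_ticket = {"summary": "Improve search", "description": ""}

print(passes_field_validator(placeholder_ticket))  # True
print(passes_field_validator(incomplete_ticket))   # False
```

The placeholder ticket passes because the check only sees that the boxes are filled. Deciding whether "TBD" is an acceptable description is the judgment call that the checkbox cannot make.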

3. Technical and Architecture Review

What Jira did: A status called In Review. An assigned reviewer field. Comments in the ticket. Eventually, a transition to Reviewed.

What review actually requires: Reading code changes. Understanding ripple effects. Comparing alternatives. Predicting failure modes.

Why agents win: An agent in a code environment sees the diff, knows the repository context, can simulate impacts, and suggests concrete improvements. It doesn't just witness the review — it conducts one.

Jira records that review happened. Agents perform the review.

4. Dependency Management

What Jira did: Linked issues with "blocks" and "is blocked by" relations. Manual updates when things changed. Periodic grooming sessions to catch stale links.

What dependencies actually require: Understanding implicit connections. Tracking how decisions in one area affect another. Detecting conflicts before they become blockers.

Why agents win: An agent can analyze code, roadmaps, and backlogs simultaneously. It recalculates dependencies dynamically. It warns about collisions before anyone is blocked — not after.

Jira: static model. Agents: living model.
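One narrow slice of "implicit connections" can be sketched without any AI at all: two tickets that touch the same files but carry no explicit link. The ticket keys, file paths, and the `find_unlinked_overlaps` helper below are all hypothetical, and a real agent would reason over far more than file overlap. Treat this as an illustration of the idea, not an implementation of it.

```python
from itertools import combinations


def find_unlinked_overlaps(touched_files, explicit_links):
    """Return (ticket_a, ticket_b, shared_files) for ticket pairs that touch
    the same files but have no explicit link between them."""
    linked = {frozenset(pair) for pair in explicit_links}
    overlaps = []
    for a, b in combinations(sorted(touched_files), 2):
        shared = touched_files[a] & touched_files[b]
        if shared and frozenset((a, b)) not in linked:
            overlaps.append((a, b, sorted(shared)))
    return overlaps


# Hypothetical data: which files each ticket's changes touch.
touched_files = {
    "PROJ-1": {"billing/invoice.py", "billing/tax.py"},
    "PROJ-2": {"billing/tax.py", "api/routes.py"},
    "PROJ-3": {"docs/readme.md"},
    "PROJ-4": {"api/routes.py"},
}

# The only relation someone remembered to record by hand.
explicit_links = [("PROJ-2", "PROJ-4")]

for a, b, shared in find_unlinked_overlaps(touched_files, explicit_links):
    print(f"{a} and {b} both touch {shared} but are not linked")
```

Here PROJ-1 and PROJ-2 collide on `billing/tax.py` and nobody linked them. The static model has no way to notice that until someone is already blocked.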

5. Incident and Problem Management

What Jira did: An incident ticket type. An RCA template with mandatory fields. Manual analysis and documentation.

What post-mortems actually require: Reconstruction of events. Correlation between logs, commits, and decisions. Pattern recognition across incidents. Systemic root cause identification.

Why agents win: An agent reads logs, maps them to commits, identifies recurring patterns, and produces a coherent post-mortem narrative. It doesn't just store the incident — it understands it.

Jira holds the record. Agents find the meaning.

6. Change Management

What Jira did: RFC issue type. Approval workflow. Statuses like Proposed, Approved, Implemented. Governance through transition permissions.

What change management actually requires: Risk assessment. Cost-benefit analysis. Comparison of alternatives. Rollback planning.

Why agents win: An agent can model scenarios, estimate risks, propose phased rollouts, and generate rollback plans. It doesn't just control who approves — it helps determine what should be approved.

Jira manages permissions. Agents support decisions.

7. Knowledge Management and Documentation

What Jira did: Tasks titled "Update documentation." Links to Confluence pages. Reminder notifications. Perpetually outdated content.

What documentation actually requires: Generation from source changes. Automatic synchronization. Reflection of reality rather than intention.

Why agents win: An agent can update documentation based on code diffs, synchronize knowledge across repositories, and write living docs that track the system as it evolves.

Jira reminds you to document. Agents write the documentation.

8. Planning and Re-Planning

What Jira did: Backlogs. Story points. Velocity tracking. Sprint ceremonies. Endless refinement meetings.

What planning actually requires: Continuous hypothesis adjustment. Real-time adaptation to new information. Scenario modeling rather than fixed forecasts.

Why agents win: An agent can recalculate plans with every new input, incorporate actual progress data, and present scenarios instead of single-track predictions.

Jira records what was planned. Agents help you think about what comes next.

Where Jira Remains Useful

To be clear: Jira is not worthless.

It remains valuable as:

- A system of record for compliance purposes

- An audit trail that satisfies external stakeholders

- A status contract for parties who need simplified views

- A reporting surface for management dashboards

But these are administrative functions, not thinking functions. Jira excels at being a ledger. It was never meant to be a brain.

The Honest Diagnosis

If your Jira workflow:

- Spans more than five to seven statuses

- Contains stages named review, analysis, or approval

- Requires comments explaining why a transition happened

Then you have encoded cognitive work into a status machine.
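The three signals above are simple enough to check mechanically. The sketch below assumes a workflow is just a list of status names plus a flag for mandatory transition comments. The function name, the cutoff of seven, and the example workflow are my own illustrative choices, not anything Jira exposes.

```python
# Words that suggest a status stands in for cognitive work.
COGNITIVE_STAGE_WORDS = ("review", "analysis", "approval")

# Assumption: use seven, the upper end of the rough threshold, as the cutoff.
MAX_STATUSES = 7


def diagnose_workflow(statuses, requires_transition_comments=False):
    """Return the list of warning signals a workflow matches (empty if none)."""
    findings = []
    if len(statuses) > MAX_STATUSES:
        findings.append(f"{len(statuses)} statuses (more than {MAX_STATUSES})")
    cognitive = [
        status for status in statuses
        if any(word in status.lower() for word in COGNITIVE_STAGE_WORDS)
    ]
    if cognitive:
        findings.append("cognitive stages: " + ", ".join(cognitive))
    if requires_transition_comments:
        findings.append("transitions require explanatory comments")
    return findings


# Hypothetical workflow for illustration.
example_statuses = [
    "Draft", "Refined", "In Progress", "In Review", "Security Analysis",
    "Awaiting Approval", "QA", "Staging", "Done",
]

findings = diagnose_workflow(example_statuses, requires_transition_comments=True)
for finding in findings:
    print("-", finding)
```

A plain three-status board such as To Do, In Progress, Done returns an empty list. The more signals a workflow matches, the more likely it is doing a ledger's job and a brain's job at once.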

That worked when there was no alternative. Now there is.

The path forward is not to abandon Jira. It is to recognize what it should and should not do. Let Jira hold decisions. Let agents help make them.

Jira became the skeleton we built our processes around — useful, structural, necessary. But skeletons don't think. And increasingly, our processes need to.

Key Takeaways

- Status control and meaning-handling are different disciplines. Jira was built for the first. AI agents are built for the second.

- Many workflows were cognitive work in disguise. Refinement, review, planning: these require judgment, not checkboxes.

- The transition is not replacement but specialization. Jira as ledger, agents as reasoning partners. Each in its proper role.

The question is no longer whether this shift will happen. It is whether your organization will recognize it before the gap becomes too wide to close gracefully.